How edge analytics can save your data scientists valuable time

Your data scientists are among your most valuable assets. But if you are not making full use of edge analytics, you could be wasting their time.

If your company is like most, you embark on an industrial IoT project expecting it to improve efficiency and profitability. All that incoming data, you surmise, will help you run a leaner, meaner business. But in reality, it could do the opposite.

The reason is that it’s not only about the data. Successful industrial IoT projects are not just about sensing and monitoring, but also about making data useful to your business and making sure it is used optimally.

The people who do this are your data scientists: highly trained, hard-to-find specialists who are worth their weight in gold. According to the employment data site Glassdoor, the average salary for a data scientist is almost $121,000 a year, compared to $66,000 for a programmer.

And what do these expensive experts do?

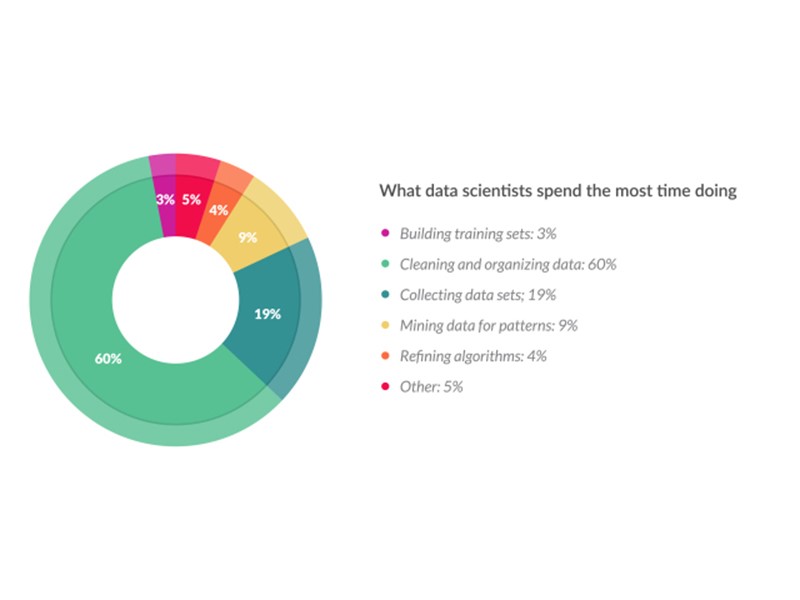

Customers tell us that their data scientists spend between 60% and 80% of their time just cleaning and managing data, making sure it is ready for analysis. According to research, they spend just 9% of their time mining for patterns and 4% refining algorithms.

This is like paying your legal team to clean out your stationery room: it’s necessary, but it doesn’t take a highly skilled professional to do the job. In fact, it doesn’t take a professional at all.

Edge analytics to the rescue

Most of the data cleaning and management that occupies data scientists’ time can easily be carried out automatically by edge analytics software. Such software includes functions for pre-processing IoT data in real time, effectively turning big data into small, relevant data.

Key tasks that you can achieve with edge analytics include:

- Data cleaning, including removing outliers and smoothing out noisy data.

- Data normalization and standardization, making sure all your data shares a consistent format and scale, so it can be fed directly into dashboards and other analytical tools.

- Data enrichment, including adding metadata, timestamps and other information to your sensor data.

- Flow control functions, such as throttling, joining data from several sensors into a single payload, or splitting data when needed.

- Data reduction and anomaly detection, for instance to ensure that only relevant data is sent to the cloud, in aggregated and filtered form unless an anomaly occurs.

- Data integration, delivering data to your preferred enterprise or cloud-hosted analytics system as a stream or as files, depending on your needs.
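To make the list above more concrete, here is a minimal Python sketch of the kind of pre-processing an edge node might run on a short window of readings from a single sensor before anything is sent to the cloud. It is not Crosser’s implementation or API: the function names, the median-absolute-deviation (MAD) outlier filter, the trailing moving average, the min-max scaling to an assumed nominal sensor range, and the 0.9 alarm threshold are all illustrative assumptions.

```python
from datetime import datetime, timezone
from statistics import mean, median

# Hypothetical edge pre-processing pipeline; all names and thresholds are illustrative.


def remove_outliers(values, k=3.0):
    """Data cleaning: drop readings more than k MADs away from the window median."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return list(values)  # all readings (nearly) identical, nothing to drop
    return [v for v in values if abs(v - med) / mad <= k]


def moving_average(values, window=3):
    """Data cleaning: smooth noise with a trailing moving average."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def normalize(values, lo, hi):
    """Normalization: min-max scale into [0, 1] using the sensor's assumed nominal range."""
    if hi == lo:
        raise ValueError("nominal range must be non-empty")
    return [(v - lo) / (hi - lo) for v in values]


def process_window(sensor_id, raw, lo, hi, alarm=0.9):
    """Clean, normalize, enrich and reduce one window of raw readings."""
    if not raw:
        raise ValueError("empty window")
    scaled = normalize(moving_average(remove_outliers(raw)), lo, hi)
    # Enrichment: attach metadata and a UTC timestamp.
    record = {
        "sensor_id": sensor_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Reduction: forward only an aggregate by default.
        "count": len(scaled),
        "mean": mean(scaled),
        "max": max(scaled),
    }
    # Anomaly detection: if the smoothed signal crosses the alarm level,
    # forward the full cleaned window instead of just the summary.
    if record["max"] >= alarm:
        record["anomaly"] = True
        record["samples"] = scaled
    return record


if __name__ == "__main__":
    # A single 95.0 spike is treated as a glitch and dropped; only a summary is kept.
    print(process_window("temp-01", [20.1, 20.3, 20.2, 95.0, 20.4], lo=0.0, hi=100.0))
    # A sustained high reading survives cleaning and is flagged with its samples.
    print(process_window("temp-02", [95.0, 96.0, 94.0, 95.0, 97.0], lo=0.0, hi=100.0))
```

The design choice this illustrates is the one described in the list: isolated spikes are treated as noise, while a sustained deviation survives cleaning and is forwarded in full, so the cloud receives small summaries most of the time and detailed data only when something unusual happens.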

How much is it worth to carry out these tasks at the edge? Consider that, according to Baseline Magazine, 40% of data scientists currently do not have enough time in the day even to do analysis.

And while data scientists are a happy group, with 79% claiming to be satisfied at work, it is fair to say cleaning data is not their favorite task: 58% said they spend too much time doing it, and more than 50% said what they really enjoy is predictive analysis and data mining.

Happier data scientists. Lower software costs. And more

If you use technology to offload menial data cleaning and management to the edge, you could free up about two-thirds of an average data scientist’s day and let them spend more time on the work they enjoy.

This, in turn, could reduce staff turnover in your data science team while at the same time allowing your business to achieve deeper, more meaningful insights from your IoT deployment. Plus, there is one final economic benefit.

Most databases and analytics systems charge by the amount of data they have to store or process. If much of that data is never used, you could end up wasting a significant portion of your budget.

With smart edge analytics, on the other hand, you ensure that only relevant data reaches your cloud systems. This means you make the most cost-effective use of cloud resources. It doesn’t take a data scientist to figure out that this makes sense.

This article was written by Göran Appelquist, currently CTO at Crosser. Göran has more than 20 years of experience in leading positions at high-tech companies. Crosser is a start-up that designs and delivers the industry’s first pure-play real-time software solution for Fog/Edge Computing architectures. The article was originally published here.